Docker Interview Questions and Answers

These questions target Docker, a set of platform as a service (PaaS) products. Knowing the answers to these frequently asked Docker interview questions will help you clear a job interview. Docker is widely used to develop ASP.NET Web API and Spring Boot microservices, so these technical questions should be helpful for your next interview.

1. What is Docker?

Docker is an open-source platform for developing, testing, shipping, and running your application in an isolated environment called a container. Docker lets you keep your application separate from your infrastructure, which reduces the delay between writing your application and deploying it in production.

Docker runs applications securely in isolation and lets you run many containers at the same time on a host machine (the machine on which Docker runs). Containers are lightweight because they run directly on the host machine's kernel rather than on a hypervisor (software that creates and runs virtual machines). As a result, you can run more containers on a given piece of hardware than you could virtual machines. You can also run Docker containers inside virtual machines.

The Docker Certified Associate (DCA) exam is designed by Docker to validate Docker skills.

2. What is the Docker Engine?

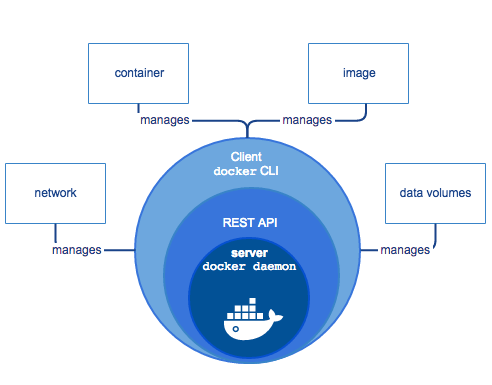

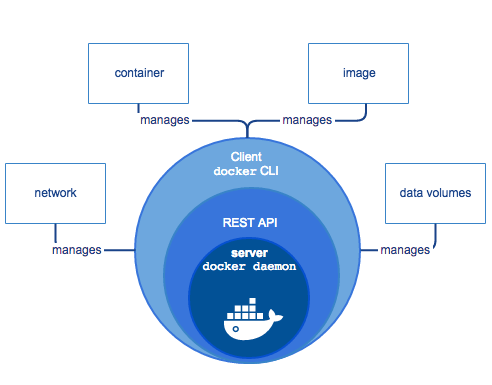

Docker Engine is a client-server application with these major components:

- Daemon process ('dockerd' command) - a server, which is a type of long-running program.

- A REST API - an interface that programs and the CLI use to talk to the daemon and instruct it.

- A CLI (Command Line Interface) client ('docker' command).

Source: Docker Overview

3. What can you use Docker for?

You can use Docker for:

- Fast, consistent delivery of your applications - Docker streamlines the development lifecycle by letting developers work in local containers that provide their applications and services. Containers are well suited to CI (Continuous Integration) and CD (Continuous Delivery) workflows.

- Responsive deployment and scaling - Docker's container-based platform allows highly portable workloads. Docker containers can run on a developer's laptop, on physical or virtual machines, with a cloud provider, or in a hybrid environment.

- Running more workloads on the same hardware - Docker is a cost-effective alternative to hypervisor-based virtual machines, so you can use more of your compute capacity without changing the existing hardware. Docker is lightweight and fast.

4. Give some Docker Example scenarios.

Because Docker provides fast, consistent delivery of applications, it fits scenarios such as the following:

- Developers write code locally and share their work with team members using Docker containers.

- Developers use Docker to push their applications into a test environment and execute automated or manual tests.

- Developers fix bugs in the development environment and redeploy the fixes to the test environment for testing.

- Delivering fixes or updated applications to customers is easy: you push the updated image to the production environment.

5. Explain the Docker architecture.

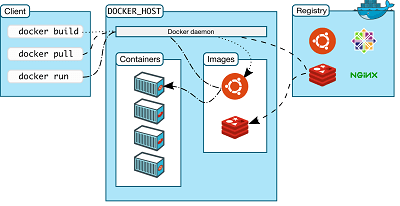

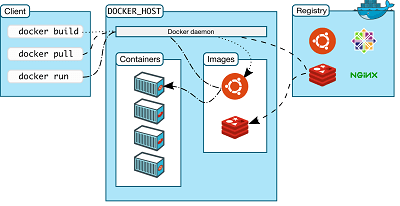

Docker uses a client-server architecture. The Docker client talks to the Docker daemon (a long-running program) through a REST API, over UNIX sockets or a network interface. The client and daemon can run on the same system, or the client can connect to a remote daemon. The Docker daemon is responsible for building, running, and distributing containers.

Source: Docker Architecture

- Docker Daemon - listens for Docker API requests and manages Docker objects. It can also communicate with other Docker daemons to manage Docker services.

- Docker Client - communicates with the Docker daemon through the REST API and can communicate with more than one daemon.

- Docker Registries - store Docker images. Docker Hub is a public registry, and Docker is configured to look for images on Docker Hub by default.

- Docker Objects - when you use Docker, you create and use images, containers, volumes, networks, and other objects. These are called Docker objects.
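Because the client and daemon talk over a REST API, you can reproduce what the `docker` CLI does with a plain HTTP request sent over the daemon's UNIX socket. The Python sketch below assumes the default Linux socket path `/var/run/docker.sock` and the Engine API's `GET /_ping` health-check endpoint; the `UnixHTTPConnection` class and `engine_get` function are hypothetical helpers, and a real client would also handle API versioning, authentication, and errors.

```python
import http.client
import socket
from typing import Tuple

# Assumed default location of the Docker daemon's socket on Linux.
DEFAULT_DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """Hypothetical helper: an HTTPConnection that speaks HTTP over a UNIX domain socket."""

    def __init__(self, socket_path: str, timeout: float = 5.0) -> None:
        # "localhost" only fills the HTTP Host header; no TCP connection is opened.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        # Replace the usual TCP connect with a connection to the daemon's socket file.
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(path: str, socket_path: str = DEFAULT_DOCKER_SOCKET) -> Tuple[int, str]:
    """Send a GET request to the daemon's REST API and return (status, body)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, response.read().decode("utf-8")
    finally:
        conn.close()
```

On a machine with a running daemon and permission to use its socket, `engine_get("/_ping")` should return status 200 with the body `OK`, which is the same check the CLI performs before talking to the daemon.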

6. What is DTR (Docker Trusted Registry)?

If you are using Docker Datacenter (DDC), Docker provides an enterprise-grade image storage solution called Docker Trusted Registry (DTR). DTR can be installed on a virtual private network or on-premises, so you can store your images securely behind your firewall. DTR also provides a user interface that authorized users can access to view and manage repositories.

7. What are the common Docker objects?

When you use Docker, you work with images, containers, networks, volumes, services, and other objects. These are all Docker objects.

8. Explain the Docker Images.

A Docker image is a read-only template with instructions for creating a container. It is an ordered collection of filesystem changes. An image is often based on another image, with some additional customization. For example, you can build an image that is based on the 'ubuntu' image but also installs your application's dependencies.

An image also contains a set of run parameters (such as the executable to start) that are used when a container starts.
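To make "an ordered collection of filesystem changes" concrete, the Python sketch below models each layer as a dictionary of file changes and computes the filesystem a container would see by applying the layers in order, lowest first. It is a simplified model, not Docker's storage driver: the `WHITEOUT` marker is a hypothetical stand-in for the way union filesystems record a deleted file, and the layer contents are made up for illustration.

```python
from typing import Dict, List, Optional

# Hypothetical marker meaning "this path was deleted in this layer"
# (union filesystems record deletions with special whiteout entries).
WHITEOUT: Optional[str] = None

Layer = Dict[str, Optional[str]]  # path -> file content, or WHITEOUT


def flatten(layers: List[Layer]) -> Dict[str, str]:
    """Apply layers from bottom (index 0) to top and return the visible files."""
    view: Dict[str, str] = {}
    for layer in layers:
        for path, content in layer.items():
            if content is WHITEOUT:
                view.pop(path, None)  # a later layer deleted this file
            else:
                view[path] = content  # a later layer adds or overwrites this file
    return view


# An image based on another image, plus two customization layers.
base = {"/etc/os-release": "ubuntu", "/tmp/cache": "junk"}
install_deps = {"/usr/bin/python3": "binary", "/tmp/cache": WHITEOUT}
add_app = {"/app/main.py": "print('hi')"}

image = [base, install_deps, add_app]
print(sorted(flatten(image)))  # → ['/app/main.py', '/etc/os-release', '/usr/bin/python3']
```

Note that `flatten` never modifies the lower layers; the base image still contains `/tmp/cache`, which is why several images can safely share the same base layers.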

9. Why are images lightweight, fast, and small?

Docker lets you create your own image using a Dockerfile, which contains the simple instructions needed to build it. Each instruction creates a layer in the image. When you change the Dockerfile and rebuild the image, only the changed layers (and the layers built on top of them) are rebuilt; the earlier layers are reused from the cache. This is why Docker images are small, lightweight, and fast to build compared with other virtualization technologies.
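The caching behaviour can be sketched in a few lines of Python. This is a simplified model and an assumption, not Docker's actual implementation: each instruction gets a cache key derived from the previous layer's key plus the instruction text, so changing one instruction invalidates that step and every step after it, while earlier steps are reused. Real builds also take into account the contents of files brought in by COPY and ADD; the function names are hypothetical.

```python
import hashlib
from typing import List


def layer_keys(instructions: List[str]) -> List[str]:
    """Return a chained cache key for each instruction (simplified model)."""
    keys: List[str] = []
    parent = ""
    for instruction in instructions:
        # A layer's identity depends on its parent layer and its own instruction.
        parent = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        keys.append(parent)
    return keys


def steps_to_rebuild(old: List[str], new: List[str]) -> List[int]:
    """Indexes of instructions in `new` whose layer cannot be reused from `old`."""
    cached = set(layer_keys(old))
    return [i for i, key in enumerate(layer_keys(new)) if key not in cached]


v1 = [
    "FROM ubuntu:22.04",
    "RUN apt-get update && apt-get install -y python3",
    "COPY app.py /app/",
    'CMD ["python3", "/app/app.py"]',
]
v2 = list(v1)
v2[2] = "COPY app.py requirements.txt /app/"  # edit the third instruction

print(steps_to_rebuild(v1, v2))  # → [2, 3]
```

The same model explains a common Dockerfile habit: put instructions that rarely change (base image, system packages) first and frequently edited ones (copying your source code) last, so most rebuilds reuse the expensive early layers.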

10. Describe Docker containers.

Containers are created from images; in other words, a container is a runnable instance of an image. Once you have built an image containing your application and all its dependencies, you can start multiple containers from it. Each container is isolated from other containers and from the host machine. A Docker container is therefore defined by its image plus any configuration options you provide when you create or start it.
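The relationship between one image and many containers can be sketched as a copy-on-write model: every container shares the image's read-only layers and gets its own thin writable layer on top. The Python below is an illustrative simplification (the `Container` class is hypothetical, and deletions are not modelled); it shows why a file changed in one container is not visible in another container started from the same image.

```python
from types import MappingProxyType
from typing import Dict, Mapping


class Container:
    """Hypothetical container: a shared read-only image plus a private writable layer."""

    def __init__(self, image: Mapping[str, str]) -> None:
        self.image = image                  # shared by all containers, read-only
        self.writable: Dict[str, str] = {}  # private to this container

    def read(self, path: str) -> str:
        # Look in the container's own layer first, then fall back to the image.
        if path in self.writable:
            return self.writable[path]
        return self.image[path]

    def write(self, path: str, content: str) -> None:
        # Copy-on-write: changes land only in this container's writable layer.
        self.writable[path] = content


# MappingProxyType is a read-only view, standing in for immutable image layers.
image = MappingProxyType({"/app/config.txt": "default"})

web1 = Container(image)
web2 = Container(image)
web1.write("/app/config.txt", "tuned")

print(web1.read("/app/config.txt"), web2.read("/app/config.txt"))  # → tuned default
```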

11. Explain the underlying technology of Docker.

Docker is written in the Go programming language and takes advantage of several Linux kernel features to deliver its functionality. In particular, it relies on the following technologies:

- Namespaces - Docker uses namespaces to provide the isolated environment called a container. When you run a container, Docker creates a set of namespaces for it, and these namespaces provide the layer of isolation.

- Control groups - Control groups (cgroups) limit an application to a specific set of resources. They allow Docker Engine to share the available hardware resources among containers and, optionally, to enforce limits and constraints.

- Union file systems - Union file systems (UnionFS) work by creating layers, which makes them lightweight and fast. Docker Engine uses UnionFS to provide the building blocks for containers.

- Container format - Docker Engine combines namespaces, control groups, and UnionFS into a wrapper called a container format.
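Control groups are what enforce the resource limits you pass to `docker run`. As a worked example, Docker's documentation describes `--cpus=1.5` as equivalent to `--cpu-period=100000 --cpu-quota=150000`: in every 100,000-microsecond scheduler period, the container's processes may use at most 150,000 microseconds of CPU time, that is, one and a half CPUs. The Python helpers below reproduce that arithmetic and convert a `--memory` value such as `512m` into bytes (Docker's memory suffixes b, k, m, and g are multiples of 1024). The function names are hypothetical and the parser is a simplified assumption, not Docker's own.

```python
# Default CFS scheduler period, in microseconds, used with --cpus.
DEFAULT_CPU_PERIOD_US = 100_000

# Binary multipliers for the memory suffixes accepted by `docker run --memory`.
MEMORY_UNITS = {"b": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def cpus_to_quota(cpus: float, period_us: int = DEFAULT_CPU_PERIOD_US) -> int:
    """CPU time (microseconds) the container may use in each scheduler period."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    return round(cpus * period_us)


def memory_to_bytes(value: str) -> int:
    """Convert a limit such as '512m' or '2g' into bytes (simplified parser)."""
    value = value.strip().lower()
    if value and value[-1] in MEMORY_UNITS:
        number, unit = value[:-1], value[-1]
    else:
        number, unit = value, "b"  # no suffix means bytes
    return int(number) * MEMORY_UNITS[unit]


print(cpus_to_quota(1.5))       # → 150000
print(memory_to_bytes("512m"))  # → 536870912
```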

12. What is Docker Swarm? How is it different from SwarmKit and Swarm Mode?

Docker Swarm (classic Swarm) is a Docker-native container orchestration tool. It is an older tool that runs standalone, separately from Docker Engine, and lets users manage containers deployed across multiple hosts. It can still handle multi-host orchestration, but Swarm Mode, which is built into Docker Engine, is recommended for new Docker projects.

For more about Docker Swarm vs SwarmKit, visit Difference between Docker Swarm, Swarm Mode & SwarmKit.

13. What is Docker SwarmKit?

Docker SwarmKit is a separate project that implements Docker's orchestration system. It is a toolkit for orchestrating distributed systems at any scale. SwarmKit's main benefits include:

- Distributed - SwarmKit uses the Raft consensus algorithm to coordinate and does not rely on a single point of failure to make decisions.

- Secure - SwarmKit uses mutual TLS for node authentication, role authorization, and transport encryption.

- Simple - SwarmKit is simple to operate and does not need an external database. It minimizes infrastructure dependencies.
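The "no single point of failure" property comes from Raft's majority rule: the manager nodes can keep making decisions only while a majority (a quorum) of them is reachable. The short Python sketch below works through that arithmetic; it illustrates the rule rather than SwarmKit's code, and the function names are hypothetical.

```python
def quorum(managers: int) -> int:
    """Smallest majority of manager nodes needed to agree on a decision."""
    if managers < 1:
        raise ValueError("need at least one manager")
    return managers // 2 + 1


def fault_tolerance(managers: int) -> int:
    """How many managers can fail while a quorum is still available."""
    return managers - quorum(managers)


for n in (1, 3, 4, 5, 7):
    print(f"{n} managers: quorum {quorum(n)}, tolerates {fault_tolerance(n)} failure(s)")
```

The output shows why an odd number of managers is usually recommended: going from 3 to 4 managers raises the quorum from 2 to 3 without tolerating any additional failure.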

For more, visit Docker SwarmKit.

14. What is a Dockerfile?

A Dockerfile is a text file that holds the set of instructions used to build an image. Each instruction in a Dockerfile creates a layer in the image. When you rebuild the image, only the changed layers, and the layers built on top of them, are rebuilt.

15. What are services?

A service is created to deploy an application image when Docker Engine is in swarm mode. A service is simply the image for a microservice within the context of a larger application. Examples of services include a database, an HTTP server, or any other executable program that you want to run in a distributed environment.

When you create a service, you specify which container image to use and which commands to execute inside the running containers. For more, visit How services work in Docker.
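Under the hood, `docker service create` sends a service specification to the daemon's REST API. The Python sketch below builds such a specification as a plain dictionary, showing the two things the text says you must provide: the image and the command. The field names (`Name`, `TaskTemplate.ContainerSpec.Image`, `Command`, `Mode.Replicated.Replicas`) follow the Docker Engine API's `ServiceSpec` object, but verify them against the API reference for your Engine version; the `service_spec` helper is hypothetical, and the image and command are only illustrative.

```python
import json
from typing import Any, Dict, List


def service_spec(name: str, image: str, command: List[str], replicas: int = 1) -> Dict[str, Any]:
    """Build a minimal swarm service specification (hypothetical helper)."""
    if replicas < 0:
        raise ValueError("replicas cannot be negative")
    return {
        "Name": name,
        "TaskTemplate": {
            # The container image and the command each task (container) runs.
            "ContainerSpec": {"Image": image, "Command": command},
        },
        # Replicated mode: the swarm keeps this many tasks running.
        "Mode": {"Replicated": {"Replicas": replicas}},
    }


spec = service_spec("web", "nginx:alpine", ["nginx", "-g", "daemon off;"], replicas=3)
print(json.dumps(spec, indent=2))
```

From the CLI, roughly the same service would be created with `docker service create --name web --replicas 3 nginx:alpine nginx -g "daemon off;"`.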

16. Docker Swarm vs Kubernetes?

Docker Swarm and Kubernetes are both open-source container orchestration tools, and each comes with pros and cons.

Docker Swarm Pros:

- It's built for use with Docker Engine and has its own Swarm API.

- Provides easy integration with tools such as the Docker CLI and Docker Compose.

- Offers easy installation and setup for Docker environments.

- Offers features such as internal load balancing, service scaling, and swarm managers for high availability.

Docker Swarm Cons:

- Offers limited customization and extensions.

- Provides fewer features than Kubernetes.

Kubernetes Pros:

- Very active development of the codebase by the open-source community.

- Well tested by big organizations such as Google and IBM, and works well on most operating systems.

- Offers features such as load balancing, horizontal scaling, storage orchestration, automated rollouts and rollbacks, service discovery, self-healing, intelligent scheduling, and batch execution.

Kubernetes Cons:

- Specialized knowledge is required to manage the Kubernetes Master.

- The open-source community releases frequent updates, so careful patching is required to avoid breaking changes.

- Requires additional tools, such as the kubectl CLI, services, CI/CD, and other practices, for full management.

For more, visit Docker Swarm vs Kubernetes.

17. What is containerization, and why is it becoming popular?

18. What are the docker client commands?

19. What are the docker registry commands?

20. What are the docker daemon commands?

21. Give an example of a simple Dockerfile.

22. What is the difference between RUN and CMD in Dockerfile?

23. Explain some quick facts about Docker.

Developers consider Docker a good choice for deploying applications anytime, anywhere, whether on-premises or in the cloud. Here are some facts about it.

- Docker was launched by Solomon Hykes in 2013. Solomon Hykes later served as Docker's CTO and chief architect before leaving the company in 2018.

- Docker lets us build, ship, run, and orchestrate applications in isolated environments.

- Starting a Docker container is much faster than starting a virtual machine, but virtual machines are not obsolete yet.

- Docker Hub offers free options for developers to host public repositories and paid options for private repositories.

- Docker Desktop and Docker Compose have reduced the time needed to set up a local development environment, which helps developers be more productive.

24. What is the future of Docker?

Docker offers a quick way to build, develop, ship, and orchestrate distributed applications in isolated environments.

Many companies use Docker to speed up their development processes. Docker also helps automate deployment management, so many companies are adopting this containerized approach for application development and deployment.

Docker also integrates with hundreds of tools, such as Bitbucket, Jenkins, Kubernetes, Ansible, and Amazon EC2. As a result, there are many jobs in the market that require Docker skills, and Docker professionals are often well paid.

Some General Interview Questions for Docker

1. How would you rate yourself in Docker?

When you attend an interview, the interviewer may ask you to rate yourself in a specific technology such as Docker. Your answer depends on your knowledge of and work experience with Docker.

2. What challenges did you face while working on Docker?

This question is specific to your own work and depends entirely on your past experience. Simply explain the challenges you faced with Docker in your projects.

3. What was your role in the last Project related to Docker?

The answer depends on the role and responsibilities assigned to you and the functionality you implemented using Docker in your project. This question is asked in almost every interview.

4. How much experience do you have in Docker?

Here you can describe your overall work experience with Docker.

5. Have you done any Docker Certification or Training?

The answer depends on whether you have completed any Docker training or certification. Certifications and training are not essential, but they are good to have.